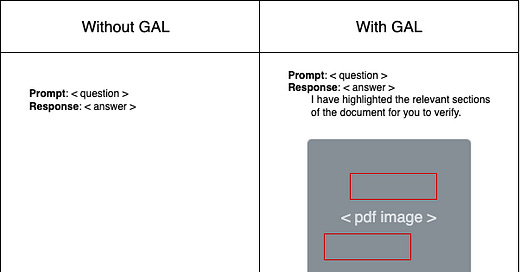

In this post I walk through a technical approach I’ve been exploring that I’ll call GAL - Generate, Attribute, Localize. It addresses the problem of getting LLMs (or foundation models more broadly) to do data/retrieval augmented generation while (1) attributing the answer to the exact chunks of source input that informed it and (2) localizing those chunks in the source documents they came from. I show how to make this work for any document type - not just text documents but unstructured and continuous-valued data like audio, images, PDFs, and more.

Special thanks to early readers who gave valuable feedback - Derek Tu, Diamond Bishop (CEO of Augmend), Lee James, Brian Raymond (CEO of UnstructuredIO), Feynman Liang, and Ryan Dao.

Let’s start with an example.

GAL on Audio

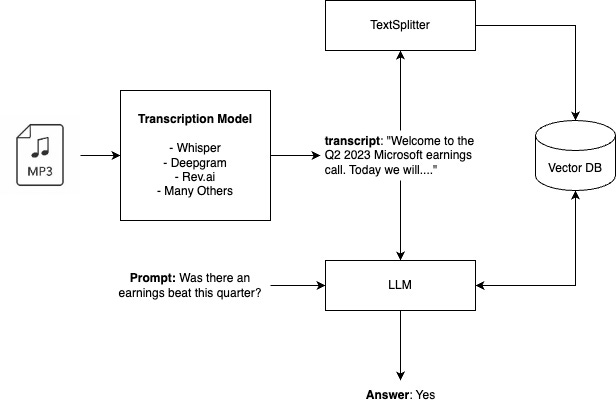

Say you have an audio file of a podcast and want a standard QA experience with that episode. Your workflow will look something like the following:

Transcribe: turn the unstructured/binary audio into text an LLM can understand

Create QA Chain: construct the prompt template and initialize the LLM

Chat: send the user question into the LLM along with the transcript

Or, at a larger scale, you might split this and many other transcripts into smaller chunks and then retrieve the most relevant ones based on vector similarity.
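A minimal sketch of that chunk-and-retrieve step is below. It assumes a toy bag-of-words vector in place of a real embedding model (`embed` is a hypothetical stand-in), and each chunk keeps the start and end word offsets of the text it covers.

```python
import math
from collections import Counter


def chunk_words(words, size=50, overlap=10):
    """Split a word list into overlapping chunks, keeping [start, end) word offsets."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    step = size - overlap
    chunks = []
    for start in range(0, max(len(words) - overlap, 1), step):
        end = min(start + size, len(words))
        chunks.append({"text": " ".join(words[start:end]), "start": start, "end": end})
    return chunks


def embed(text):
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(chunks, question, k=2):
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(embed(c["text"]), q), reverse=True)[:k]


words = ("revenue grew ten percent this year while operating costs stayed flat "
         "and the new product line launched in march").split()
top = retrieve(chunk_words(words, size=6, overlap=2), "how much did revenue grow", k=1)
print(top[0]["start"], top[0]["end"], top[0]["text"])  # → 0 6 revenue grew ten percent this year
```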

These kinds of chat applications have become ubiquitous. Hackers everywhere are building some kind of “chat your data” application, and these apps have become very easy to build with off-the-shelf LLM chains from open-source libraries like LangChain.

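```python
from langchain.chains.question_answering import load_qa_chain
from langchain.llms import OpenAI

# load_earnings_calls is a helper from the full notebook that loads the
# transcripts as LangChain Documents; args.input_path points at the input files.
docs = load_earnings_calls(args.input_path)

# The "stuff" chain type places every document into a single prompt
chain = load_qa_chain(OpenAI(temperature=0), chain_type="stuff")

query = "Was there an earnings beat this quarter?"
response = chain.run(input_documents=docs, question=query)
print(response)
```

Link to Full Notebook of Traditional QA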

What is far less common today is showing the user exactly what in the input influenced the output - grounding answers in the source data and providing references/hyperlinks back to those chunks in the original input. This is important for any application that benefits from human verification and human-in-the-loop user experiences.

How can we do this in practice?

Your first thought might be to do chunking-based Retrieval Augmented Generation (RAG) and use the metadata (start and end indices, timestamps) of the chunks you retrieved to generate the answer. Those chunks were the most relevant to the answer, so we can just show the users those chunks, right?

Well, chunks are… messy. If your chunks are a few sentences, or even a few paragraphs, long - is ALL of that text relevant? What if all the content is related but the LLM decides to only incorporate the information from one out of three chunks?

Is all of this really relevant to answering the question “What was annual revenue?”

Wouldn’t it be better if we could say exactly what in the input influenced an LLM’s answer? This approach would blend the benefits of an LLM’s natural chat interface with Google Featured Snippets-style highlighting.

How might we do it? Like many problems with LLMs it turns out we can just… ask.

We will keep the general workflow from Figure 1 but update our prompt to ask the LLM to output “excerpts” from the original text.

Quick Aside on Output Parsers and Guardrails

Since we are now asking the language model to output multiple distinct fields, I’m going to use one more layer of tooling called Output Parsers. LangChain does have an OutputParser module, but so far I have preferred the dedicated Guardrails library.

Guardrails lets you define an expected output schema, handles retries if the schema doesn’t match, and does the parsing for you. You can read more on their website.
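To make the parse-and-retry idea concrete, here is a minimal library-free sketch. This is not how Guardrails is implemented, just the same pattern in miniature. It assumes the model returns a JSON string shaped like a simplified version of the schema used below (`answer` plus a list of `excerpt` objects). `call_llm` is a hypothetical function you supply that takes a prompt and returns text.

```python
import json


class SchemaError(ValueError):
    pass


def parse_qa_output(raw):
    """Parse and validate {"answer": str, "excerpts": [{"excerpt": str}, ...]}."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise SchemaError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict) or not isinstance(data.get("answer"), str):
        raise SchemaError("missing string field 'answer'")
    excerpts = data.get("excerpts")
    if not isinstance(excerpts, list) or not all(
        isinstance(e, dict) and isinstance(e.get("excerpt"), str) for e in excerpts
    ):
        raise SchemaError("'excerpts' must be a list of {'excerpt': str}")
    return data


def ask_with_retries(call_llm, prompt, max_retries=2):
    """Call the model, re-asking with the validation error until the output parses."""
    current_prompt = prompt
    for _ in range(max_retries + 1):
        raw = call_llm(current_prompt)
        try:
            return parse_qa_output(raw)
        except SchemaError as err:
            current_prompt = (
                f"{prompt}\n\nYour previous reply was invalid ({err}). "
                "Reply again with valid JSON only."
            )
    raise SchemaError(f"no valid output after {max_retries + 1} attempts")


# A fake model that fails once, then complies
replies = iter(["Sure! Here is the answer...",
                '{"answer": "Yes", "excerpts": [{"excerpt": "we beat estimates"}]}'])
print(ask_with_retries(lambda p: next(replies), "Was there an earnings beat?")["answer"])  # → Yes
```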

Back to the Code for GAL on Audio

The guardrail file defines most of what is happening - the output structure, a prompt instructing the LLM to respond to a question with excerpts, and the template to interpolate our data into.

audio.rail

```xml
<rail version="0.1">
<output>
    <list
        name=""
        description="">
        <object name="transcript_qa">
            <string name="answer" description="Answer to user question" />
            <list
                name="transcript_excerpts"
                description="List of exact transcript excerpts from the original input transcript that influenced this answer">
                <object>
                    <string name="excerpt" description="Exact excerpt from the input transcript. Maximum length of one paragraph."/>
                </object>
            </list>
        </object>
    </list>
</output>

<instructions>
You are a helpful assistant only capable of communicating with valid JSON, and no other text.

@json_suffix_prompt_examples
</instructions>

<prompt>
Given the following transcript and question from a user, please answer the question given the text in the transcript.
Additionally, output a list of excerpts from the transcript that influenced your answer.
The generated excerpts should be EXACTLY what they were in the input, even if there were spelling or grammatical errors.
The excerpts should be no longer than one paragraph.

Transcript:
{{transcript}}

Question:
{{user_question}}

@xml_prefix_prompt

{output_schema}
</prompt>
</rail>
```

And then simply pass the input into the guardrail call:

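```python
import guardrails as gd
import openai

guard = gd.Guard.from_rail("audio.rail")

# transcript_text (the transcript as plain text) and question (the user's
# question) are defined in the full notebook
raw_llm_output, validated_output = guard(
    openai.ChatCompletion.create,
    prompt_params={"transcript": transcript_text, "user_question": question},
    model="gpt-3.5-turbo-16k",
    temperature=0,
)
```

This gives us the GA in GAL - Generate and Attribute. To Localize, we can do a slightly more complex version of a string match.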

```python
import string


def clean_string(text):
    # Small helper to make string matching tolerant of punctuation, spacing, and case
    text = text.translate(str.maketrans('', '', string.punctuation))
    text = text.replace(" ", "")
    return text.lower()


def localize_chunk(transcript, chunk_text):
    """
    Localizes a string within a word-level transcript.

    transcript: a list of {'punctuated_word': str, 'start': float, 'end': float}
        (word-level transcription output, e.g. Deepgram's word objects)
    chunk_text: a string whose words appear consecutively in the transcript

    Returns the inclusive (start, end) indices of the matching words in the
    transcript list, or (None, None) if there is no exact match.
    """
    chunk_words = chunk_text.split()
    if not chunk_words:
        return None, None
    target = [clean_string(w) for w in chunk_words]
    # Stop early enough that transcript[i + j] never runs past the end of the list
    for i in range(len(transcript) - len(chunk_words) + 1):
        if all(
            clean_string(transcript[i + j]['punctuated_word']) == target[j]
            for j in range(len(chunk_words))
        ):
            return i, i + len(chunk_words) - 1
    return None, None
```

Link to Full Notebook of GAL on Audio
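`localize_chunk` returns word indices rather than times. A small follow-on sketch converts those indices into a playable time range. The helper names are illustrative, not from the notebook, and it assumes each transcript word carries `start` and `end` times in seconds as in the word format above. The `#t=start,end` suffix is W3C Media Fragments syntax, which major browsers support for seeking in audio and video elements.

```python
def span_to_time_range(transcript, start_idx, end_idx):
    """Map inclusive word indices to (start_seconds, end_seconds), or None if not found."""
    if start_idx is None or end_idx is None:
        return None
    return transcript[start_idx]["start"], transcript[end_idx]["end"]


def media_fragment_url(audio_url, time_range):
    """Append a Media Fragments temporal range so playback jumps to the excerpt."""
    start, end = time_range
    return f"{audio_url}#t={start:.2f},{end:.2f}"


transcript = [
    {"punctuated_word": "Revenue", "start": 12.0, "end": 12.4},
    {"punctuated_word": "grew", "start": 12.4, "end": 12.7},
    {"punctuated_word": "10%.", "start": 12.7, "end": 13.3},
]
print(media_fragment_url("episode.mp3", span_to_time_range(transcript, 1, 2)))  # → episode.mp3#t=12.40,13.30
```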

Alternatively, you could include the timestamps in the original input and have the LLM generate the timestamps itself, doing Attribution and Localization in a single step. So far I’ve found this doesn’t work as well, but I would love to see a more quantitative comparison of the different approaches.
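For reference, that single-step variant mostly changes how the transcript is serialized and how the answer is parsed. The sketch below is minimal and rests on a few assumptions: the same word format as above, a hypothetical `<word timestamp> -- <word text>` line per word, and a model that returns the start timestamp of each excerpt. Because generated timestamps can be slightly off, it snaps each one to the nearest real word start.

```python
import bisect


def format_timestamped_transcript(transcript):
    """Serialize words as '<start seconds> -- <word>' lines for the prompt."""
    return "\n".join(f"{w['start']:.2f} -- {w['punctuated_word']}" for w in transcript)


def nearest_word_index(transcript, timestamp):
    """Snap a model-generated timestamp to the index of the closest word start."""
    starts = [w["start"] for w in transcript]  # assumed sorted ascending
    if not starts:
        raise ValueError("empty transcript")
    i = bisect.bisect_left(starts, timestamp)
    if i == 0:
        return 0
    if i == len(starts):
        return len(starts) - 1
    # Pick whichever neighbor is closer to the generated timestamp
    return i if starts[i] - timestamp < timestamp - starts[i - 1] else i - 1


transcript = [
    {"punctuated_word": "Revenue", "start": 12.0, "end": 12.4},
    {"punctuated_word": "grew", "start": 12.4, "end": 12.7},
    {"punctuated_word": "10%.", "start": 12.7, "end": 13.3},
]
print(format_timestamped_transcript(transcript))
print(nearest_word_index(transcript, 12.5))  # → 1
```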

GAL on PDFs

Now I’ll broaden the applications by showing that this same approach works on document types other than audio transcribed to text. For this example we’ll use a PDF of the 2022 Microsoft Annual Report.

The same approach from above applies, but since we’re no longer using audio, we need something besides a transcription model to turn our PDF into natural language an LLM will understand.

Unstructured.IO is the best tool I’ve found for turning any document type (besides audio and video) into LLM-friendly text - the “ETL for LLMs” as they call it. Unstructured has a simple partition interface that will infer the input file type, choose an appropriate model to parse it, and return the contents of the document as text.

Cloud vendors like AWS offer similar hosted services such as AWS Rekognition and AWS Textract, but Unstructured is open-source, easy to get started with, and just raised $25M to continue refining their solution. (Get your API Key here)

Importantly for our problem, Unstructured is now attaching metadata for the coordinate system and coordinates of the text elements they extract from the document. In the audio example above we were implicitly using a coordinate system of time and timestamps as the coordinates. It’s worth taking a second to think about all of the coordinate systems you use implicitly as you look at images, listen to audio, browse the internet, and more. You use these to navigate data, but LLMs need them represented explicitly.

I find Unstructured’s coordinate system approach here really smart and think we’re just at the beginning of seeing what can be built with it.

Now for the code.

Step 1: Convert the PDF to text with Unstructured

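```python
from unstructured.partition.api import partition_via_api

# PDF_INPUT_FILE and UNSTRUCTURED_API_KEY are set earlier in the notebook
elements = partition_via_api(filename=PDF_INPUT_FILE, api_key=UNSTRUCTURED_API_KEY, coordinates=True)

# Format the input as "<element id> -- <element text>" rows
pdf_input = "\n".join([e.id + " -- " + e.text for e in elements])

# Small helper for localizing later: element ID -> points of its bounding region
element_coordinates = {
    e.id: e.metadata.coordinates.points
    for e in elements
    if e.metadata.coordinates is not None
}
```

Step 2: Create Guardrail with Prompt and Output Format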

```xml
<rail version="0.1">
<output>
    <list
        name=""
        description="">
        <object name="pdf_qa">
            <string name="answer" description="Answer to user question" />
            <list
                name="pdf_excerpts"
                description="List of exact PDF excerpts from the original input PDF that influenced this answer">
                <object>
                    <string name="excerpt" description="Exact excerpt from the input PDF. Maximum length of one paragraph."/>
                    <string name="id" description="ID of the excerpt from the input"/>
                </object>
            </list>
        </object>
    </list>
</output>

<instructions>
You are a helpful assistant only capable of communicating with valid JSON, and no other text.

@json_suffix_prompt_examples
</instructions>

<prompt>
Given the following text extracted by parsing a PDF and question from a user,
please answer the question given the text in the PDF.

Additionally, you should output two things with your answer:
1. A list of excerpts from the PDF that influenced your answer.
   The generated excerpts should be EXACTLY what they were in the input, even if there were spelling or grammatical errors.
   The excerpts should be no longer than one paragraph.
2. The ID of the excerpt as given in the input

The input is formatted as a series of rows containing
element id -- element text
element id -- element text

PDF:
{{pdf_text}}

Question:
{{user_question}}

@xml_prefix_prompt

{output_schema}
</prompt>
</rail>
```

Step 3: Call the LLM via Guardrails with the user input and PDF text + IDs

```python
import guardrails as gd
import openai

guard = gd.Guard.from_rail("pdf.rail")

question = "What is this form about?"

raw_llm_output, validated_output = guard(
    openai.ChatCompletion.create,
    prompt_params={"pdf_text": pdf_input, "user_question": question},
    model="gpt-3.5-turbo-16k",
    temperature=0,
)
```

Step 4: Map back to original element coordinates with ID

```python
if validated_output is None or "pdf_qa" not in validated_output:
    print(f"Error: {validated_output}")
else:
    answer = validated_output["pdf_qa"]["answer"]
    excerpts = [e["excerpt"] for e in validated_output["pdf_qa"]["pdf_excerpts"]]
    ids = [e["id"] for e in validated_output["pdf_qa"]["pdf_excerpts"]]
    print(f"Answer: {answer}")
    print(f"Excerpts: {excerpts}")
    print(f"IDs: {ids}")
```

Step 5: Construct Final Output

For a visual data type like PDFs you would probably do something more like highlight the bounding boxes on the PDF, but I will just output the coordinates as text to keep things simple here.

```python
output_str = f"""
{answer}

I generated this answer based on the following excerpts:
"""

for excerpt, element_id in zip(excerpts, ids):
    # .get() guards against IDs the LLM generated that are not in the input
    coordinates = element_coordinates.get(element_id, "an unknown location")
    output_str += f"\t{excerpt} found at {coordinates} in {PDF_INPUT_FILE}\n"

print(output_str)
```

Link to the full notebook of GAL on PDFs
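Unstructured reports an element's location as a sequence of points, so drawing the bounding-box highlights mentioned in Step 5 usually starts by collapsing them into an axis-aligned box. A minimal sketch follows. The pixel-to-point conversion assumes pixel coordinates measured from the top-left of a page rendered at a known DPI. It targets the PDF default user space, which uses 1/72-inch units with the origin at the bottom-left. Check the coordinate system reported alongside the points in your elements' metadata before relying on either orientation.

```python
def points_to_bbox(points):
    """Collapse corner points [(x, y), ...] into (x_min, y_min, x_max, y_max)."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


def pixel_bbox_to_pdf_points(bbox, dpi, page_height_pt):
    """Convert a top-left-origin pixel box to bottom-left-origin PDF points (1 pt = 1/72 in)."""
    scale = 72.0 / dpi
    x0, y0, x1, y1 = bbox
    # Flip the y axis: pixel y grows downward, PDF user-space y grows upward.
    return (x0 * scale, page_height_pt - y1 * scale, x1 * scale, page_height_pt - y0 * scale)


corners = ((100, 200), (100, 260), (400, 260), (400, 200))
box = points_to_bbox(corners)  # → (100, 200, 400, 260)
print(pixel_bbox_to_pdf_points(box, dpi=72, page_height_pt=792))  # → (100.0, 532.0, 400.0, 592.0)
```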

Generalizing and Theorizing

Now that we’ve seen two concrete examples on different data types I want to generalize this idea to show that it can work on any document type and give names and requirements to the key components.

Components and Workflow

At a high-level we need the following pieces:

Source data - the file(s) you want an LLM to process

Discretizer - a tool that turns the (potentially continuous valued) original document into text elements with coordinates in a coordinate system

Note: This is distinct from the tokenizer used to turn text into LLM-readable tokens. As an analogy, you can go from Source Document → Discretized Document → Tokens just as you can go from Source Code → Assembly Language → Machine Code. Each layer represents the same thing, in a more machine-readable format the lower you go.

Coordinate System and Coordinates - A numerical system that will map the extracted elements back into the document they came from originally.

Prompt - A string that (1) explicitly asks for Generation(s) and Attributions to the source data and, optionally, for the localization data (unless you plan to perform localization in a second step), and (2) formats the data in a way that is easy for the LLM to understand, e.g. “<element id> -- <element text>” or “<word timestamp> -- <word text>”

Output Parser - a function that turns the single output string into your structured object of answer, excerpts, and excerpt localization metadata

Display Function - This can be as simple as a text string that interpolates the answer text with the excerpts, or an application-specific UI like bounding boxes drawn on a PDF or a jump to the relevant timestamp in an HTML audio element.
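One way to pin these components down is as a few small Python types. The sketch below is hypothetical, and none of its names come from an existing library. A discretizer emits elements that carry an ID, text, and coordinates in a named system. The prompt formatter writes them as `<element id> -- <element text>` rows, as in the PDF example. The output side maps returned IDs back to coordinates, and a plain-text display function finishes the job.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence


@dataclass(frozen=True)
class Element:
    id: str
    text: str
    system: str         # illustrative labels, e.g. "seconds" or "PointSpace"
    coordinates: tuple  # e.g. (start_s, end_s) for audio, corner points for PDFs


# A discretizer turns raw source bytes into elements; its implementation depends on file type.
Discretizer = Callable[[bytes], List[Element]]


def format_elements(elements: Sequence[Element]) -> str:
    """Prompt-side formatting: one '<element id> -- <element text>' row per element."""
    return "\n".join(f"{e.id} -- {e.text}" for e in elements)


def localize_ids(elements: Sequence[Element], attributed_ids: Sequence[str]) -> Dict[str, Element]:
    """Output-side localization: keep only attributed IDs that exist in the input."""
    by_id = {e.id: e for e in elements}
    return {i: by_id[i] for i in attributed_ids if i in by_id}


def display(answer: str, located: Dict[str, Element]) -> str:
    """Simplest display function: the answer, then each excerpt and its location."""
    lines = [answer, "", "Based on:"]
    for e in located.values():
        lines.append(f"  {e.text!r} at {e.coordinates} ({e.system})")
    return "\n".join(lines)
```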

Implementing this we end up with a flow looking like this -

Across Audio, Images, and PDFs, you will end up with a stack like this:

GAL vs Standard Retrieval Augmented Generation

The main differences between this and a standard Retrieval/Data Augmented Generation system are the introduction of the coordinate system for all data types, an updated prompt to ask for Attributions, and handling of imperfect Attribution generations.

The only difficult dependency is a discretizer that can attach coordinates to the data it discretizes, and not every discretizer does. For example, the hosted Whisper API from OpenAI does not attach word-level timestamps to the transcript, though plenty of other providers like Deepgram and Rev.ai do. The same check applies to whichever discretizer you use for each of your data types.

Note: The granularity of your discretizer defines the granularity at which you can localize important source chunks - i.e. if your transcription model only has sentence-level timestamps, you won’t be able to localize attributed input as finely as you could with a transcription model that outputs word-level timestamps.
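A small illustration of that limit uses hypothetical sentence-level segments. Even when the attributed excerpt is only a few words, the best available answer is the time range of the whole segment that contains it. This simple sketch also assumes the excerpt does not cross a segment boundary.

```python
def localize_to_segments(segments, excerpt):
    """Return the time range of the first segment containing the excerpt, or None."""
    needle = excerpt.lower()
    for seg in segments:
        if needle in seg["text"].lower():
            return seg["start"], seg["end"]
    return None


segments = [
    {"text": "Thanks for joining.", "start": 0.0, "end": 2.0},
    {"text": "Revenue grew ten percent, driven by cloud.", "start": 2.0, "end": 6.5},
    {"text": "Margins were flat.", "start": 6.5, "end": 8.0},
]
# Four attributed words, but only the full sentence's range is recoverable
print(localize_to_segments(segments, "revenue grew ten percent"))  # → (2.0, 6.5)
```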

Additionally, everything you need to do GAL instead of standard RAG can be inferred from the file itself, so, given the right library, you shouldn’t need to do extra work as a developer.

For example:

Because it’s a PDF, you know you need an OCR model that outputs text and bounding box coordinates of the text.

Because it’s a PDF you are going to use PointSpace coordinates instead of PixelSpace because that’s what works natively with PDF files.

Because you are using PointSpace coordinates you will use element IDs instead of the raw coordinate values as input to the LLM - in this case because IDs are easier for the LLM to generate than 4 distinct PointSpace values.

Because you passed in (element ID, text) pairs, you know what OutputParser to use.

Because you have element IDs in the output, you know to map them back to PointSpace coordinates in some temporary dictionary of ID → Coordinates.
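That chain of inferences can be captured in a lookup keyed on file type. The sketch below is hypothetical. The registry, its field names, and the specific choices in it mirror the audio and PDF examples in this post rather than any library's API.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class GALConfig:
    discretizer: str        # what kind of model produces text plus coordinates
    coordinate_system: str  # the system the coordinates live in
    llm_reference: str      # what the LLM sees and echoes back to point at the source
    output_parser: str      # how attributions are mapped back to coordinates


_AUDIO = GALConfig("speech-to-text with word timestamps", "seconds",
                   "excerpt text", "string match against the word list")

REGISTRY = {
    ".pdf": GALConfig("OCR/layout model with bounding boxes", "PointSpace",
                      "element ID", "ID -> coordinates lookup"),
    ".mp3": _AUDIO,
    ".wav": _AUDIO,
}


def infer_config(filename):
    """Pick the GAL configuration from the file extension alone."""
    suffix = Path(filename).suffix.lower()
    try:
        return REGISTRY[suffix]
    except KeyError:
        raise ValueError(f"no GAL configuration registered for {suffix!r}") from None


print(infer_config("annual_report.PDF").coordinate_system)  # → PointSpace
```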

Looking Ahead

There is more to say on exactly where some of these implementations and interfaces should live, but this post is long enough already so I’ll leave those to a future post or open-source project :)

I hope this post was helpful in a few ways:

As a technical example of how to get LLMs to “show evidence” at a very fine-grained level for their generated output. I think this is an important element of explainability and building human-in-the-loop AI systems.

Providing a somewhat generalized view of what Unstructured has started with their CoordinateSystem approach for understanding where data is coming from and how to represent that to LLMs.

As inspiration for the interfaces that can be built to abstract away the concrete implementations of parsing, attributing, and localizing source attributions. I’ll be following up with more implementations here and am curious to see what others are doing in this space as well.

I’ll be hacking on a few follow-up pieces:

The interfaces I described to make it simple and consistent to do the GAL approach on any data type with the specific implementations of the coordinate system, prompt formatting, and output parsing abstracted away.

A UI that both shows the localized excerpts visually and provides a compelling human-in-the-loop experience with those excerpts

Fuzzy matching algorithms for each data type to handle LLM-generated excerpts that are just slightly off from the original input
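As a possible starting point for the text case, here is a minimal fuzzy localizer built on Python's standard `difflib`. It slides a window the length of the excerpt across the normalized word list and keeps the best-scoring position. This is a simple brute-force search rather than any particular published algorithm, and the 0.8 threshold is an arbitrary assumption you would want to tune.

```python
import difflib
import string

_STRIP = str.maketrans("", "", string.punctuation)


def normalize(word):
    return word.translate(_STRIP).lower()


def fuzzy_localize(words, excerpt, threshold=0.8):
    """Return inclusive (start, end) word indices of the best fuzzy match, or (None, None)."""
    target = [normalize(w) for w in excerpt.split()]
    if not target:
        return None, None
    source = [normalize(w) for w in words]
    n = len(target)
    best_score, best_start = 0.0, None
    for start in range(len(source) - n + 1):
        # Compare word sequences, so one wrong word costs one word's worth of score
        score = difflib.SequenceMatcher(None, source[start:start + n], target).ratio()
        if score > best_score:
            best_score, best_start = score, start
    if best_start is None or best_score < threshold:
        return None, None
    return best_start, best_start + n - 1


words = "So revenue grew about ten percent year over year.".split()
print(fuzzy_localize(words, "revenue grew roughly ten percent year"))  # → (1, 6)
```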

If you find this topic interesting, feel free to reach out and stay connected!

@tmcrosley on Twitter

crosleythomas on LinkedIn