Intelligent Application Design Patterns

5 Fullstack Patterns Emerging for AI-guided Knowledge Work Applications

This is a post about 5 Product x UX x Architecture patterns I see emerging for AI-first applications built for knowledge work - Chat, Generative UI, CoPilots, Headless Agents, and CoAgents. These patterns do not include others that are emerging for creatives - e.g. generative media with Runway, Midjourney, and Firefly - or for embedding AI components into existing/traditional software products - e.g. a summarization box, text classification, or sentiment analysis.

In my opinion, now is the most exciting time to be a full stack software engineer since the mobile era started around 2007-2008, because multiple parts of the application stack need to be redesigned together. Mobile devices made new products possible by giving us an entirely new building block of connected, always-present computers, but they also required new interaction models that worked with new screen constraints, different input modalities, unreliable network connections, and more.

LLMs and other foundation models are a new building block in their own right, opening up new product opportunities but also posing new UX challenges. This time we are dealing with constraints like unpredictable/imperfect models, high latency from long-running models, limited context lengths, and more.

A new compute module leads to newly possible products, which lead to new interaction patterns, which lead to new fullstack architectures.

There are innumerable application patterns we could talk through, but I am going to focus on the ones emerging for knowledge workers - people who spend most of their time (1) finding information, (2) understanding information, and (3) completing productive tasks based on that information.

I’m also going to try and tie together a full stack picture for these patterns -

What types of user problems does it solve?

What UX patterns work well for this product and with this software architecture?

What software architecture supports this pattern?

We will start simple with Chat and work our way up to more advanced patterns like CoPilots, CoAgents, and Generative UI. At the end, you should hopefully be able to start with a user problem you have and jump a little quicker to what type of software and UX patterns you can use to build an AI-first application to address that problem.

As you wrap your head around each individual pattern, also think about what differentiates the patterns from each other. Why do multiple patterns exist and which would you pick to solve your particular user problem?

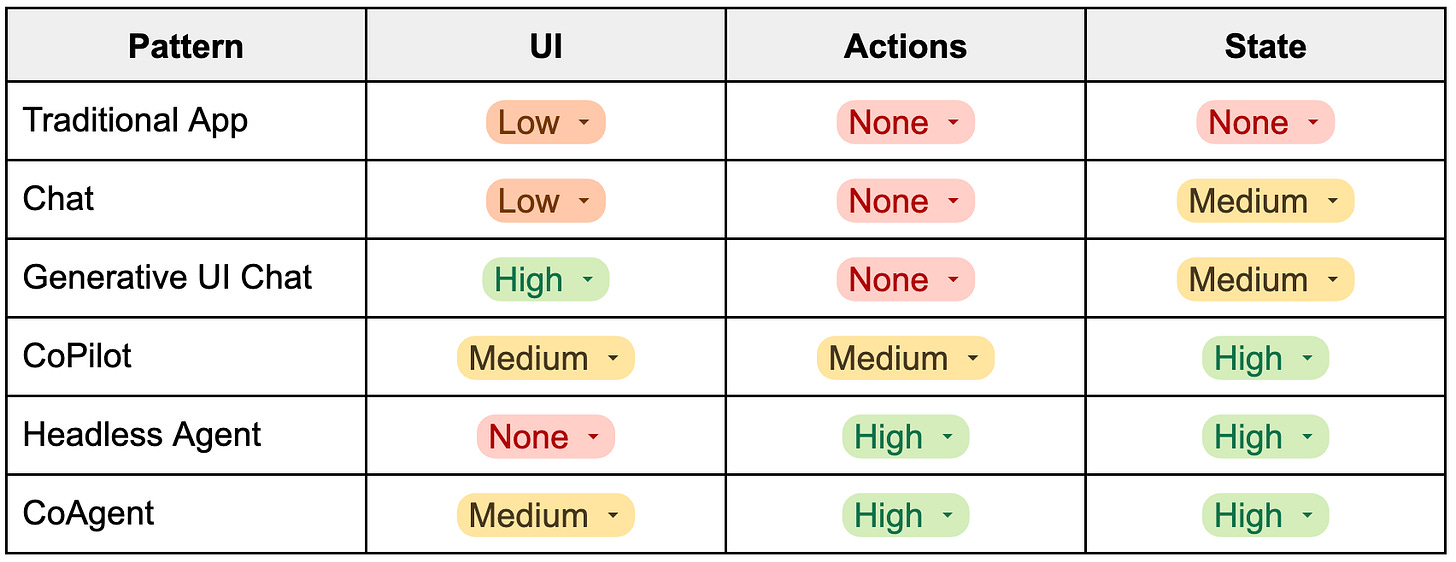

The two key differentiators I see are:

Flexibility - having an intelligent component in our application gives us the flexibility to make the application behave differently every time a user is trying to do something slightly different.

UI: Instead of having the same components render every time like a traditional software application, should we intelligently render only the ones relevant to the user’s query?

Actions: Instead of executing the same workflow of functions on every input, or button click, does the application dynamically choose what functions to call?

State: Do we always retrieve and store the same data or can we choose what context is needed based on what the user is trying to accomplish?

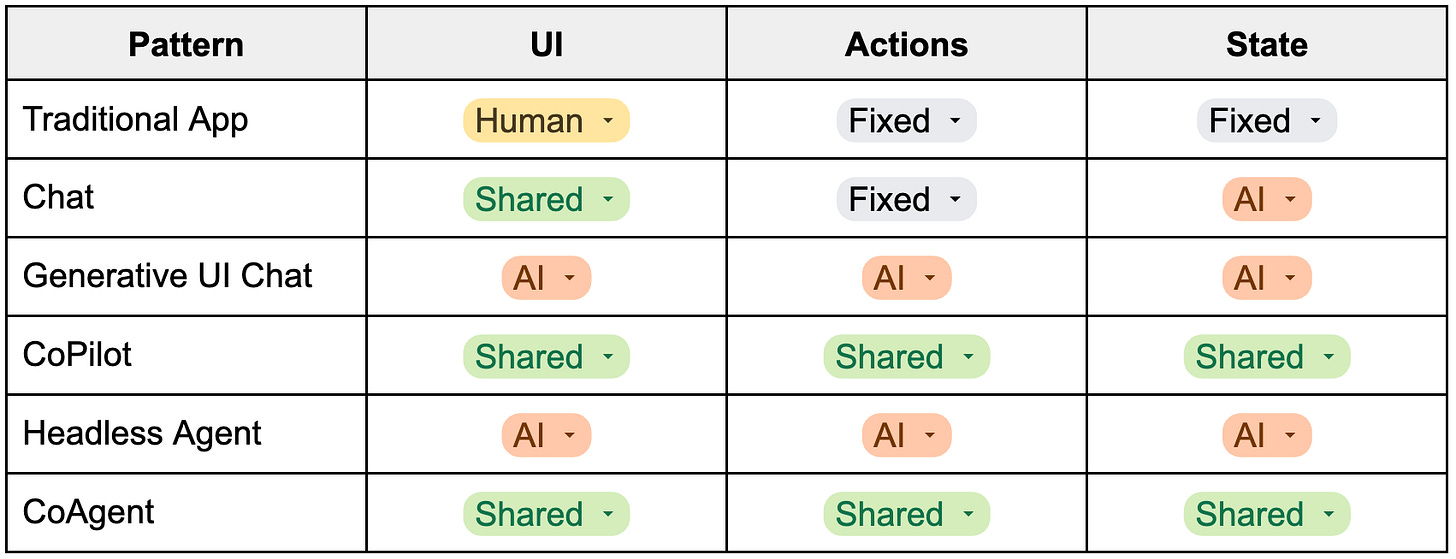

Who is in control - for every dimension of flexibility above, is it the AI making decisions when things are flexible, the human user, or some combination of the two?

As a preview, I’ve bucketed the patterns into their varying levels of flexibility and control in the following two tables.

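Pattern Comparison: Level of Flexibility

| Pattern | UI | Actions | State |
| --- | --- | --- | --- |
| Chat | Low | None | Medium |
| Generative UI Chat | High | Low | Medium |
| CoPilot | Medium | Medium | High |
| Headless Agent | None | High | High |
| CoAgent | Medium | High | High |

Pattern Comparison: Who’s in Control

| Pattern | UI | Actions | State |
| --- | --- | --- | --- |
| Chat | Shared | Fixed | AI |
| Generative UI Chat | AI | AI | AI |
| CoPilot | Shared | Shared | Shared |
| Headless Agent | Fixed | AI | AI |
| CoAgent | Shared | Shared | Shared |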

Keep in mind there is overlap in the patterns - e.g. Chat is a part of all other patterns and Generative UI can be used in CoPilot or CoAgent apps - but try to understand each individually and then combine them later.

Enough preamble, let’s jump in…

Chat

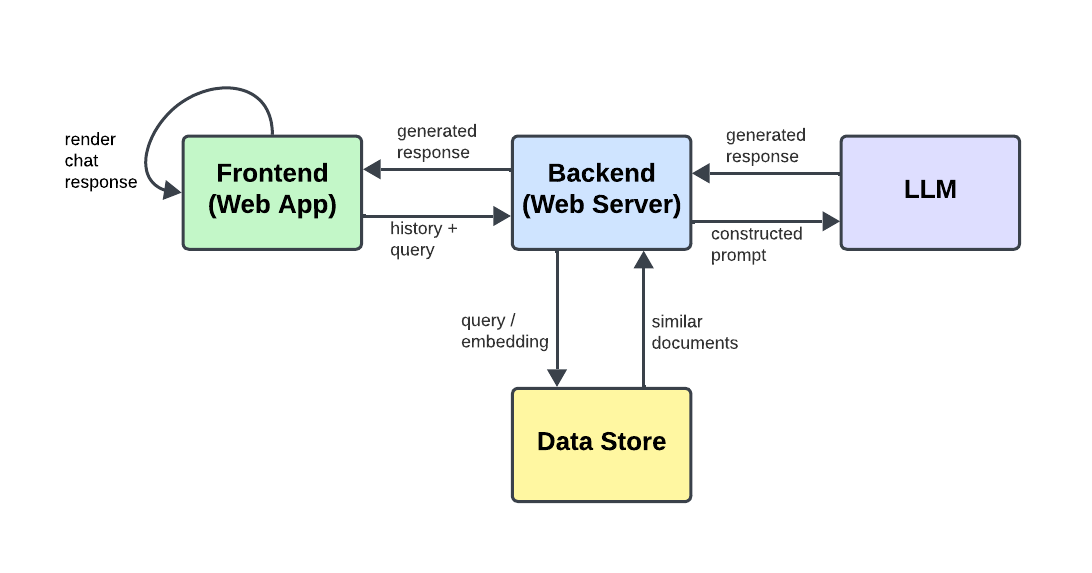

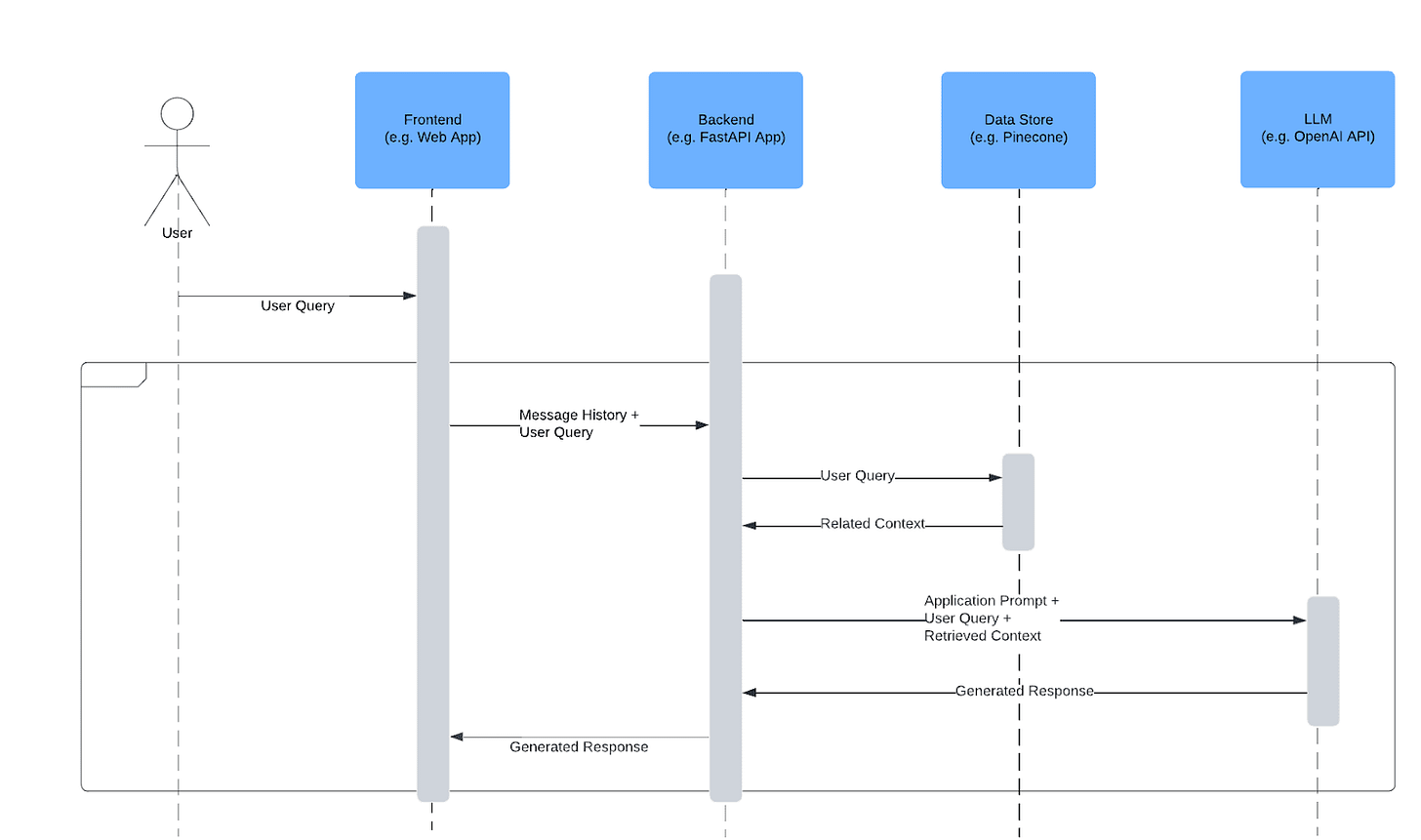

Pure chat products are most people’s introduction to building an intelligent application and are a fairly well-known pattern by now. You can create a simple UI of back-and-forth chat bubbles, connect it to a hosted LLM API, and call it a day.

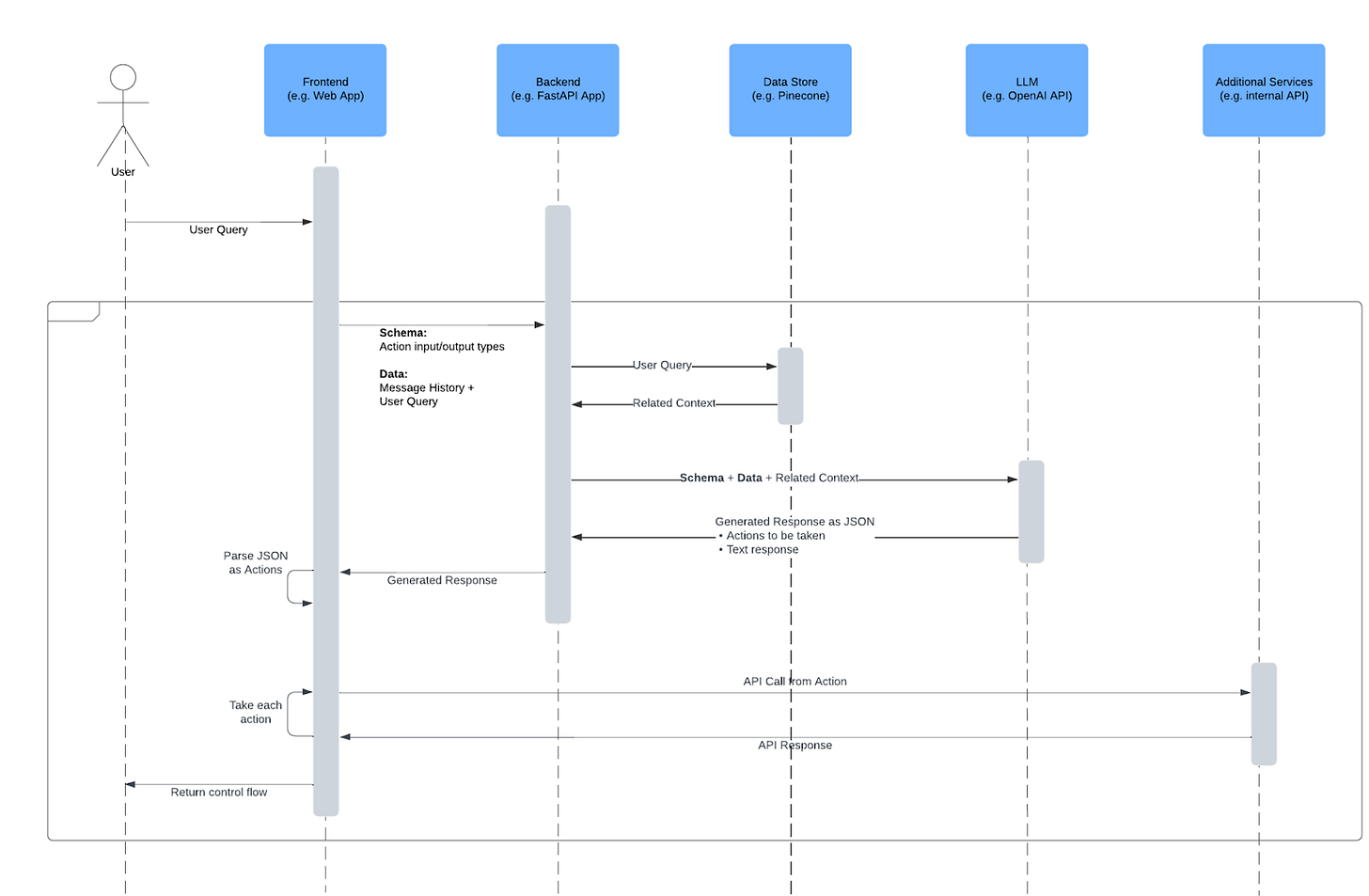

For any product of novel value you will likely add a RAG (Retrieval Augmented Generation) step before the LLM call to retrieve relevant, related data that you feed to the LLM along with the user prompt and chat history.

There are many options for implementing RAG, and they have been covered extensively elsewhere - e.g. 8 Retrieval Augmented Generation (RAG) Architectures You Should Know.
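To make the request flow concrete, here is a minimal sketch of assembling a single chat turn with a retrieval step. Everything in it is an assumption for illustration: the `ChatMessage` and `Doc` shapes, the keyword-overlap `retrieve` function (a stand-in for a real embedding search), and the made-up handbook data. The actual LLM call is left out, since the returned message list is what you would send to whichever hosted API you use.

```typescript
// Hypothetical shapes for this sketch; real LLM APIs define their own message formats.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface Doc {
  id: string;
  text: string;
}

// Lowercase a string and split it into word tokens.
function tokenize(text: string): string[] {
  return text
    .toLowerCase()
    .split(/[^a-z0-9]+/)
    .filter((token) => token.length > 0);
}

// Toy retriever: rank documents by how many query words appear in them.
// A production system would more likely use embeddings and a vector index.
function retrieve(query: string, docs: Doc[], k: number): Doc[] {
  const queryTokens = tokenize(query);
  return docs
    .map((doc) => {
      const docTokens = tokenize(doc.text);
      const score = queryTokens.filter((token) => docTokens.indexOf(token) !== -1).length;
      return { doc, score };
    })
    .filter((entry) => entry.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((entry) => entry.doc);
}

// Assemble one chat turn: retrieved context first, then prior history, then the new prompt.
function buildChatRequest(history: ChatMessage[], userPrompt: string, docs: Doc[]): ChatMessage[] {
  const context = retrieve(userPrompt, docs, 2)
    .map((doc) => `[${doc.id}] ${doc.text}`)
    .join("\n");
  const system: ChatMessage = {
    role: "system",
    content: `Answer using the context below when it is relevant.\n\nContext:\n${context}`,
  };
  const user: ChatMessage = { role: "user", content: userPrompt };
  return [system, ...history, user];
}

// Example data, made up for illustration.
const handbook: Doc[] = [
  { id: "expenses", text: "Expense reports are due on the fifth of each month." },
  { id: "travel", text: "Travel bookings need manager approval." },
  { id: "security", text: "Laptops must use full disk encryption." },
];

const firstTurn = buildChatRequest([], "When are expense reports due?", handbook);
console.log(firstTurn.map((message) => `${message.role}: ${message.content}`).join("\n---\n"));
```

Because the prior history is passed through unchanged on every turn, whatever the model said earlier is available as context for later questions without any extra state handling.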

On our key differentiators, chat products score:

Flexibility

UI: Low - the UI is typically a fixed set of user and AI chat bubbles in succession, but simple tweaks like rendering the LLM response in markdown (try react-markdown) provide some flexibility within each response chat bubble. I consider this low flexibility relative to the other patterns, but getting an LLM to respond in markdown and rendering it as-is can be subtly powerful.

Actions: None - there is a fixed set of calls from the user submitting their prompt, to a retrieval step, the LLM call, and finally returning the results to the user

State: Medium - chat history being passed into the LLM ends up giving these applications a large amount of state flexibility. The user can ask for initial context at the start of a thread (e.g. “What is the capital of Texas?”) and then later calls can use this state (e.g. “What is the monthly weather there?”) even though the application was not pre-programmed to store state for that question.

Control

UI: Shared - humans drive the presence of new input and response bubbles while the AI determines the format (e.g. in the Markdown rendering case) of the response

Actions: Fixed - this is a fixed set of calls on each user request

State: AI - what the AI decides to put in its response implicitly becomes the state for future calls

Product Examples

Despite the simplicity of this pattern, it unlocks a wide range of products. A few common ones include:

Information Retrieval:

Summarizing documents or articles

Extracting key information from text

Creative Content Generation:

Writing marketing copy, social media posts, and other marketing materials

Generating product descriptions, blog posts, and other content for websites

Creating scripts for videos and podcasts

Language Translation:

Translating text and speech in real-time

Providing translations that are culturally appropriate and accurate

Architecture

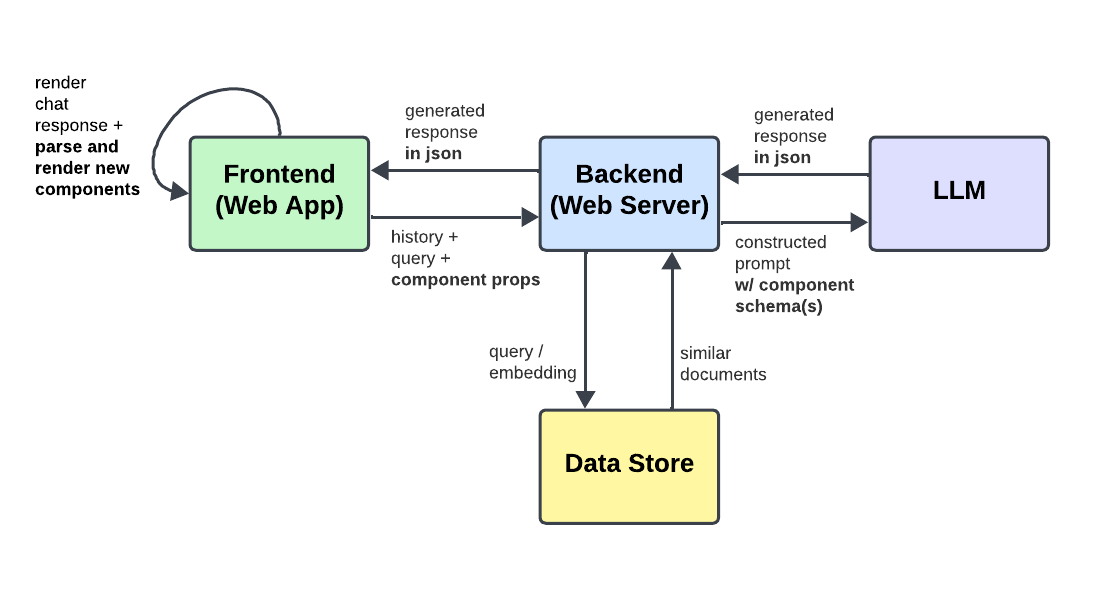

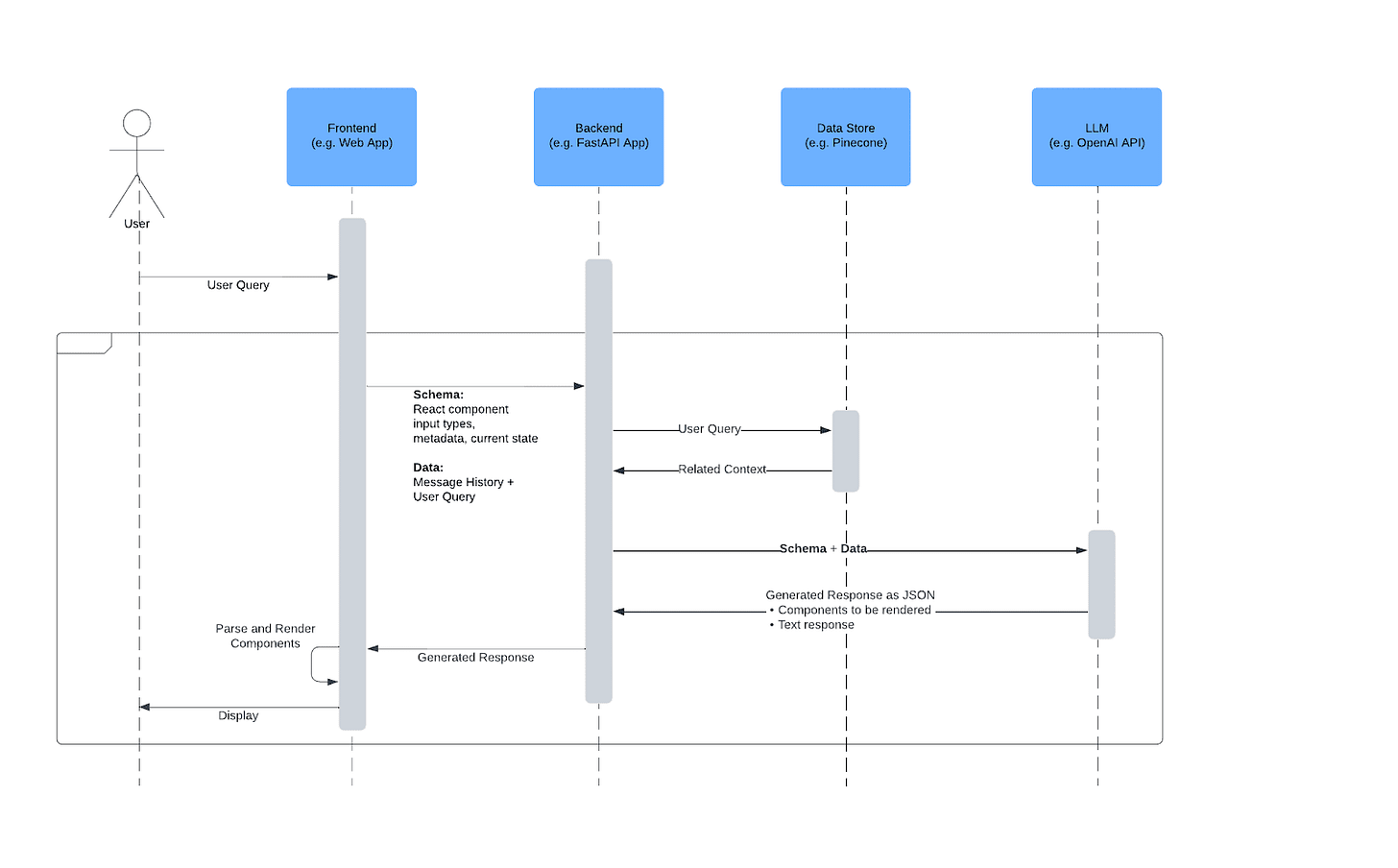

Network Diagram

Generative UI Chat

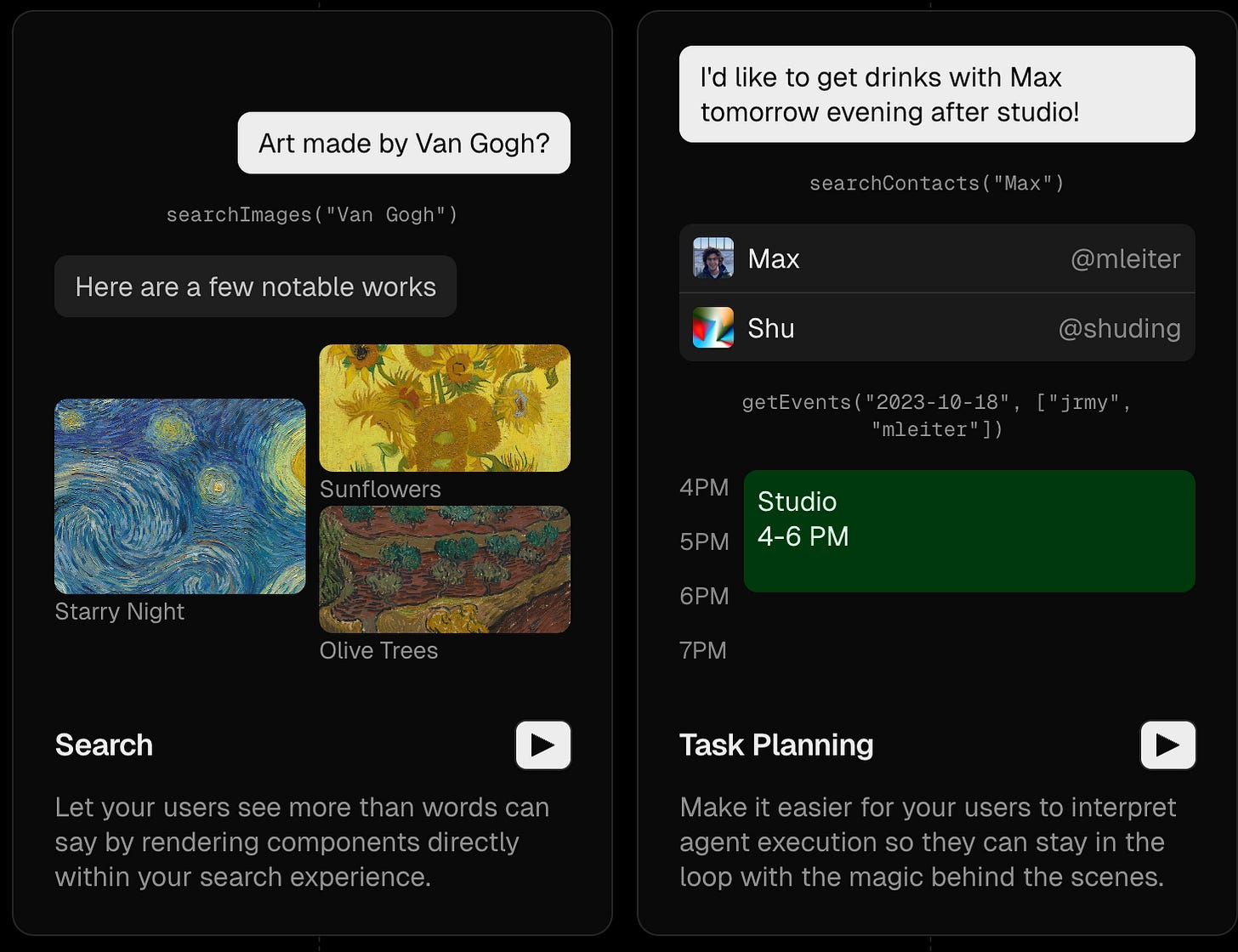

Generative UI Chat is similar to the original Chat design, but we give the LLM the ability to render UI components through its generated output. This is done by providing the interface definitions of available components (think TypeScript interfaces of React components) in the prompt, so the LLM can include in its response a list of components and corresponding input values that it thinks should be rendered. All the frontend has to do is parse the structured response and render the requested elements.

Side-by-side example of different interfaces generated dynamically to respond to different user queries. From Introducing AI SDK 3.0 with Generative UI support
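The loop described above can be sketched roughly as follows. This is not how any particular SDK implements Generative UI. It assumes a hypothetical component catalog (`MetricCard`, `LinkButton`), a made-up JSON reply format where the model returns an array of `{ component, props }` objects, and a hard-coded string standing in for the model's reply. The point is the two halves: describing the components in the prompt, then validating what comes back before rendering it.

```typescript
// Hypothetical component catalog for this sketch. In a React app each entry would
// correspond to a real component and its props interface.
interface PropSpec {
  name: string;
  type: "string" | "number";
}

interface ComponentSpec {
  name: string;
  description: string;
  props: PropSpec[];
}

interface RenderRequest {
  component: string;
  props: Record<string, unknown>;
}

const componentCatalog: ComponentSpec[] = [
  {
    name: "MetricCard",
    description: "Highlights a single number with a label",
    props: [
      { name: "label", type: "string" },
      { name: "value", type: "number" },
    ],
  },
  {
    name: "LinkButton",
    description: "A button that opens a URL",
    props: [
      { name: "label", type: "string" },
      { name: "href", type: "string" },
    ],
  },
];

// Turn the catalog into interface-style text that can be placed in the prompt.
function describeCatalog(specs: ComponentSpec[]): string {
  return specs
    .map((spec) => {
      const fields = spec.props.map((prop) => `${prop.name}: ${prop.type}`).join("; ");
      return `// ${spec.description}\ninterface ${spec.name}Props { ${fields} }`;
    })
    .join("\n");
}

// Parse the model's JSON reply and keep only requests that match the catalog.
function parseRenderRequests(llmOutput: string, specs: ComponentSpec[]): RenderRequest[] {
  let parsed: unknown;
  try {
    parsed = JSON.parse(llmOutput);
  } catch {
    return []; // the reply was not valid JSON
  }
  if (!Array.isArray(parsed)) return [];
  const valid: RenderRequest[] = [];
  for (const item of parsed) {
    if (typeof item !== "object" || item === null) continue;
    const spec = specs.filter((candidate) => candidate.name === item.component)[0];
    if (!spec) continue; // unknown component: skip it
    const props = item.props;
    if (typeof props !== "object" || props === null) continue;
    const propsMatch = spec.props.every((prop) => typeof props[prop.name] === prop.type);
    if (propsMatch) valid.push({ component: spec.name, props });
  }
  return valid;
}

// A hard-coded stand-in for a model reply: one valid request, one unknown
// component, and one request with a wrongly typed prop.
const exampleReply = JSON.stringify([
  { component: "MetricCard", props: { label: "Open tickets", value: 12 } },
  { component: "VideoPlayer", props: { src: "clip.mp4" } },
  { component: "LinkButton", props: { label: "Docs", href: 42 } },
]);

console.log(describeCatalog(componentCatalog));
console.log(parseRenderRequests(exampleReply, componentCatalog));
```

On the frontend, each surviving request would be mapped to the real component by name. Validating against the catalog first is a design choice that keeps a malformed or hallucinated component from ever reaching the render step.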

Key Differentiators

Flexibility

UI: High - this is the key reason for Generative UI. LLMs can prescribe components to be rendered conditionally based on the context of what the user is trying to accomplish.

Actions: Low - although Generative UI fits naturally with CoPilots / CoAgents (which we’ll discuss below), let’s assume for now that we aren’t giving the LLM the ability to call functions on its own. It can, however, generate actionable UI elements like buttons and links, if those are provided.

State: Medium - Generative UI is typically found in a chat-like experience that keeps history similarly to how we discussed above for Chat

Control

UI: AI - the LLM generates the UI components that should be rendered. The human developer has some control here in deciding which components to tell the LLM about, but not the human user of the application

Actions: AI - the LLM can decide to render UI components that are actionable, but otherwise the action space is pretty constrained

State: AI - what the AI decides to put in its response implicitly becomes the state for future calls

Product Examples

Search Engines - perplexity.ai is a good example

Workflow Generators - like Zapier AI

Meeting Summaries

Architecture

Network Diagram

Favorite Libraries

CoPilot

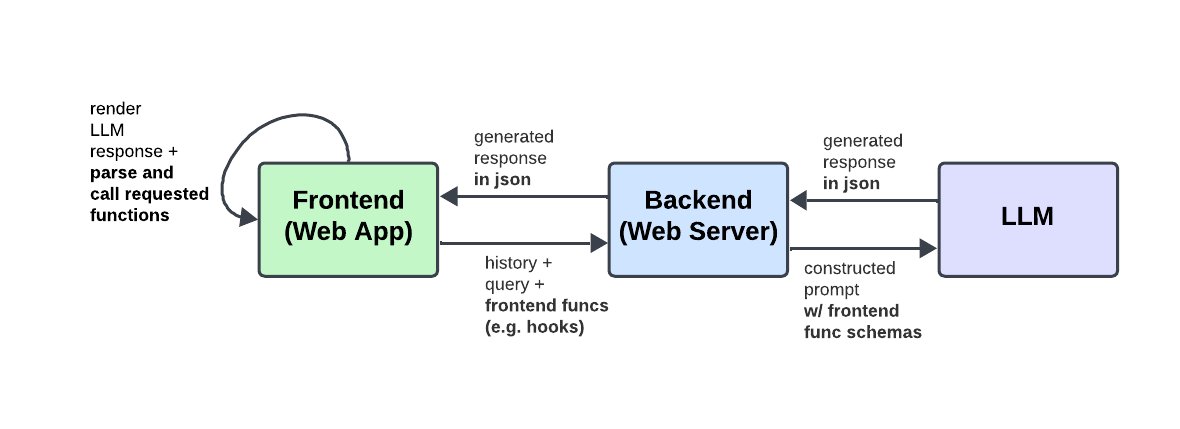

CoPilots are where we start introducing the ability for LLMs to call functions on their own. This is done by providing the function interface definitions in the context to the LLM, similar to how we provided frontend component interfaces for Generative UI. Then, we parse the response and call any requested functions (e.g. button clicks, API calls, etc.) that the LLM thinks should be called in response to the user’s query.

CoPilots can be implemented with the function calling happening in either the frontend or backend, but for now let’s think about this happening only at the frontend layer. We will distinguish purely frontend CoPilots that mirror, but automate, all the actions that a human user has access to on the frontend from Agents and CoAgents that run a significant, and potentially different, amount of their processing on the backend, separate from the user’s control.
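A rough sketch of the frontend side of this, under some assumptions: a toy todo application, a hypothetical action registry (`addTodo`, `completeTodo`), and action calls that have already been parsed out of the model's reply into `{ name, args }` objects. None of this is a specific library's API. The idea it illustrates is that the CoPilot dispatches to the same handlers a button click would, and that anything outside the registry is rejected.

```typescript
interface Todo {
  id: number;
  title: string;
  done: boolean;
}

interface AppState {
  todos: Todo[];
}

interface ActionCall {
  name: string;
  args: Record<string, unknown>;
}

interface ActionSpec {
  name: string;
  description: string;
  // Pure handler: takes the current state and returns the next state.
  handler: (state: AppState, args: Record<string, unknown>) => AppState;
}

// Hypothetical registry: the same handlers would back the app's own buttons.
const actions: ActionSpec[] = [
  {
    name: "addTodo",
    description: "Add a todo. args: { title: string }",
    handler: (state, args) => {
      const title = args["title"];
      if (typeof title !== "string") return state; // ignore malformed args
      const nextId = state.todos.reduce((max, todo) => Math.max(max, todo.id), 0) + 1;
      return { todos: [...state.todos, { id: nextId, title, done: false }] };
    },
  },
  {
    name: "completeTodo",
    description: "Mark a todo as done. args: { id: number }",
    handler: (state, args) => {
      const id = args["id"];
      if (typeof id !== "number") return state; // ignore malformed args
      return { todos: state.todos.map((todo) => (todo.id === id ? { ...todo, done: true } : todo)) };
    },
  },
];

// Text describing the available actions, to be placed in the LLM's context.
function describeActions(specs: ActionSpec[]): string {
  return specs.map((spec) => `${spec.name}: ${spec.description}`).join("\n");
}

// Apply the calls the model asked for; anything not in the registry is rejected.
function applyActionCalls(
  state: AppState,
  calls: ActionCall[],
  specs: ActionSpec[]
): { state: AppState; rejected: string[] } {
  let current = state;
  const rejected: string[] = [];
  for (const call of calls) {
    const spec = specs.filter((candidate) => candidate.name === call.name)[0];
    if (spec) {
      current = spec.handler(current, call.args);
    } else {
      rejected.push(call.name);
    }
  }
  return { state: current, rejected };
}

// Stand-in for calls parsed from a model reply.
const initialState: AppState = { todos: [{ id: 1, title: "Book flights", done: false }] };
const requestedCalls: ActionCall[] = [
  { name: "addTodo", args: { title: "Draft report" } },
  { name: "completeTodo", args: { id: 1 } },
  { name: "deleteEverything", args: {} },
];

const outcome = applyActionCalls(initialState, requestedCalls, actions);
console.log(describeActions(actions));
console.log(outcome.state.todos, outcome.rejected);
```

Keeping the handlers pure makes it straightforward to show the user a preview of the resulting state before committing to it, which is one way to keep control shared.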

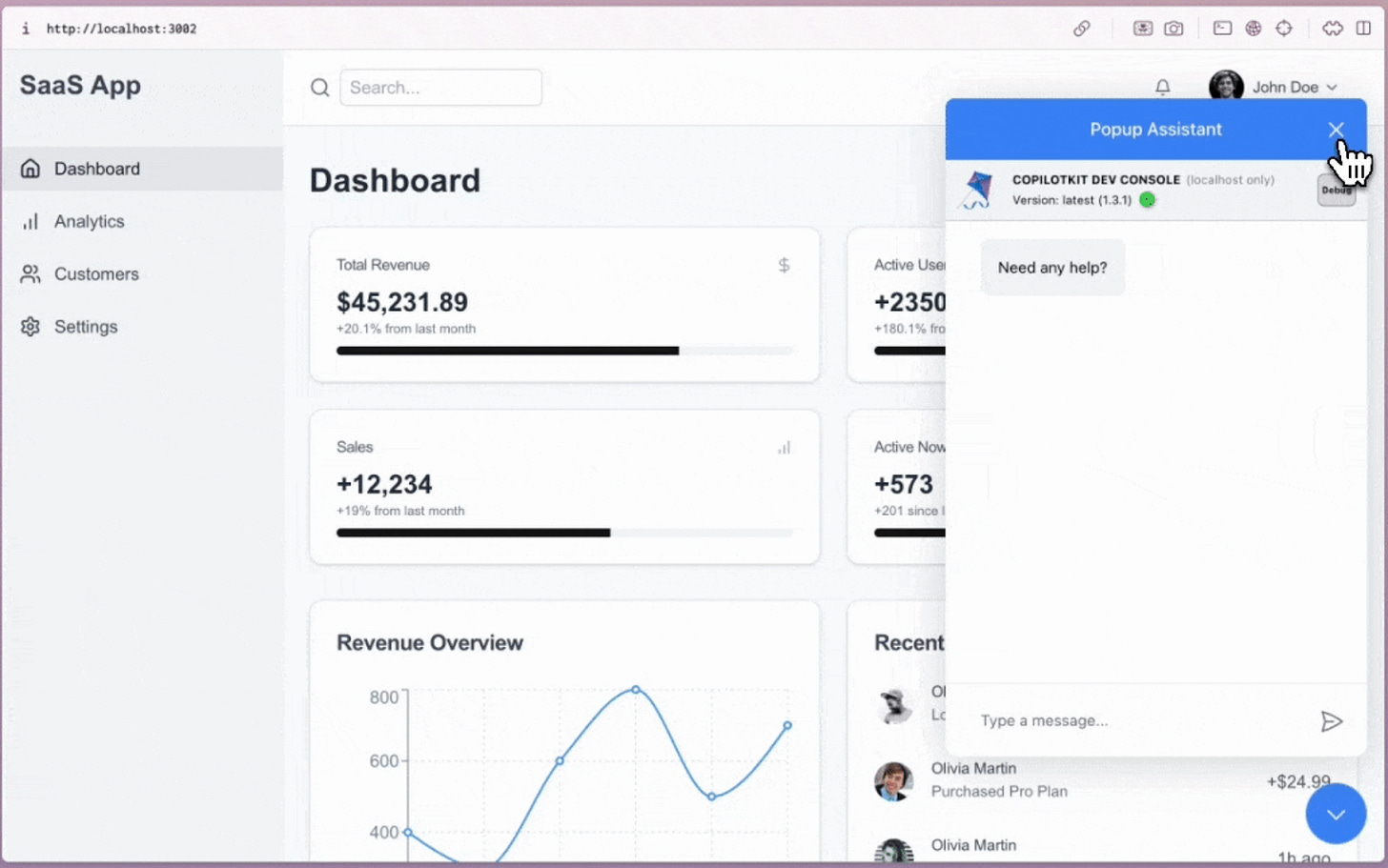

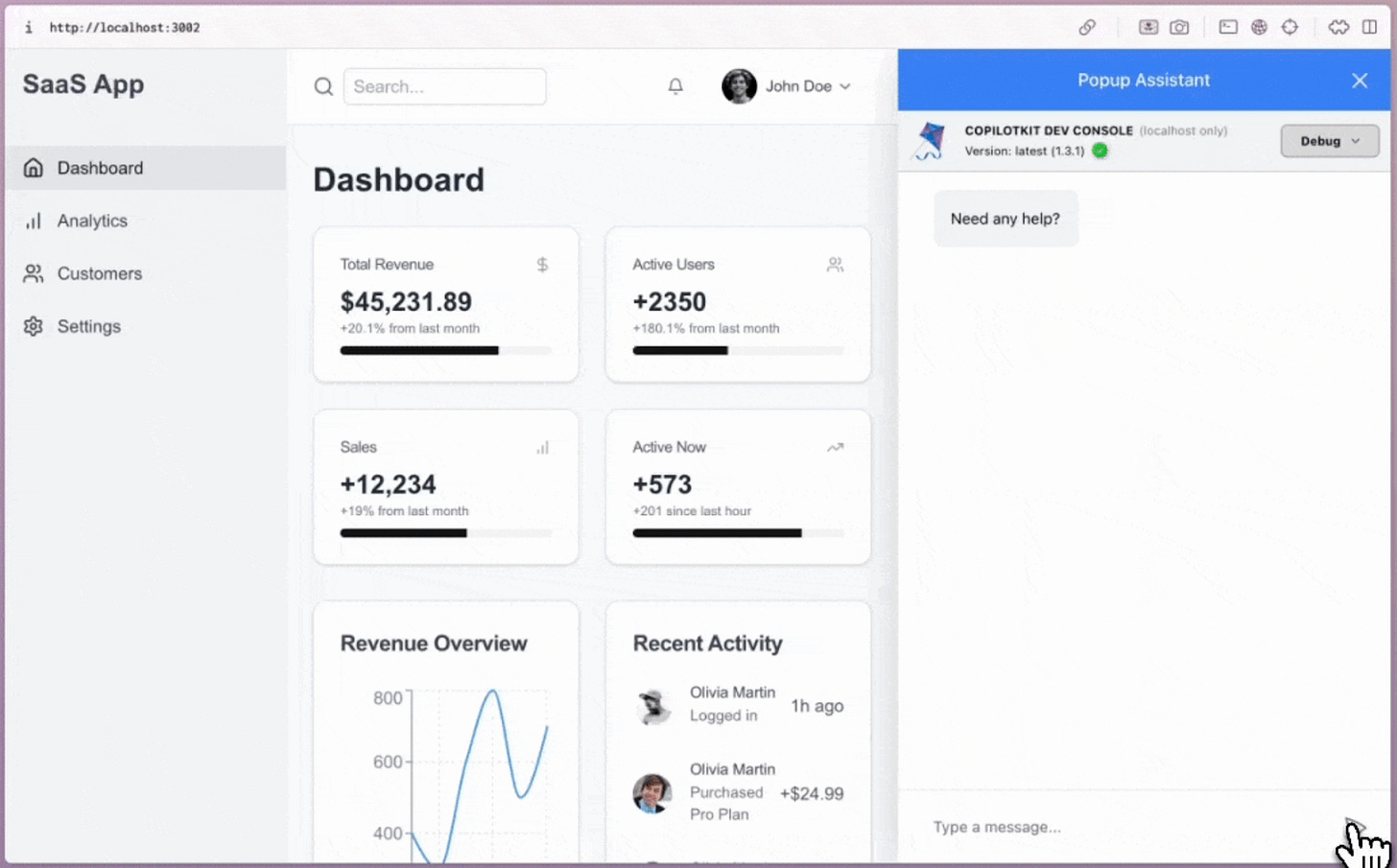

The UI also shifts significantly with this pattern, reverting from a monolithic chat experience to a more traditional-looking software application with chat as either a pop-up window or a sidebar that supports the existing UI.

Examples of chat UI pop-ups and sidebars from the CopilotKit docs.

Pop Up Chat UI

Sidebar Chat UI

Key Differentiators

Flexibility

UI: Medium - the actions provided to the CoPilot can directly alter the existing UI elements, but typically it is not creating/destroying those elements unless paired with Generative UI

Actions: Medium - the LLM can prescribe actions that should be taken, but those actions are typically scoped to the actions/hooks available within the existing application

State: High - the running history of chat is similar to pure chat experiences, but the CoPilot additionally receives the UI element state as added context

Control

UI: Shared - the user can continue using the application like normal, but the AI gains the ability to edit the UI as well

Actions: Shared - similar to UI, both user and AI can take the available actions

State: Shared - same again as for UI and Actions

Product Examples

Writing code

Data analysis and visualization

Financial analysis

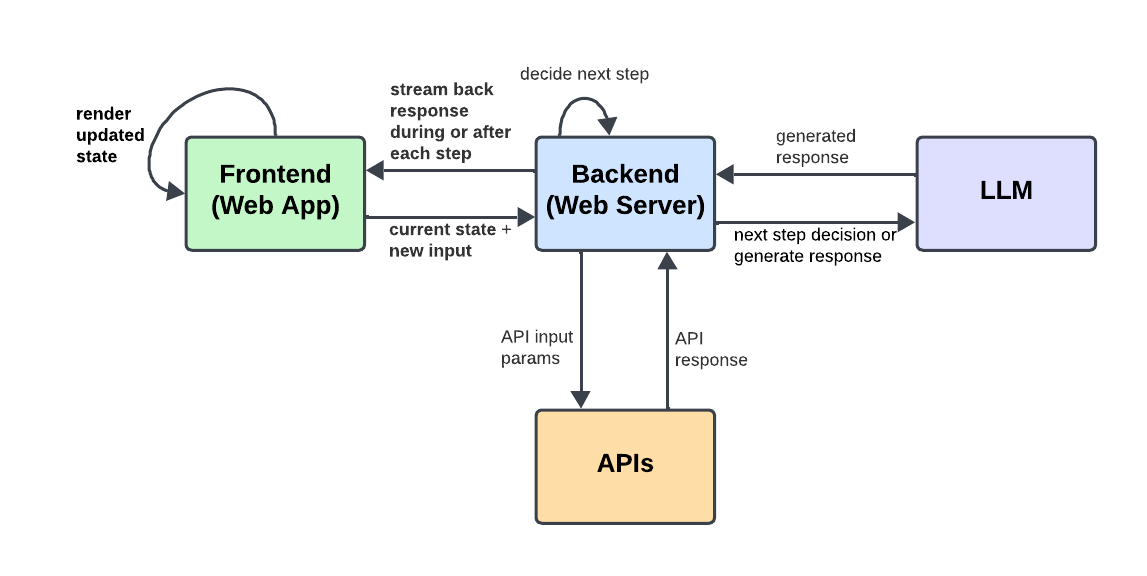

Architecture

Network Diagram

Headless Agent

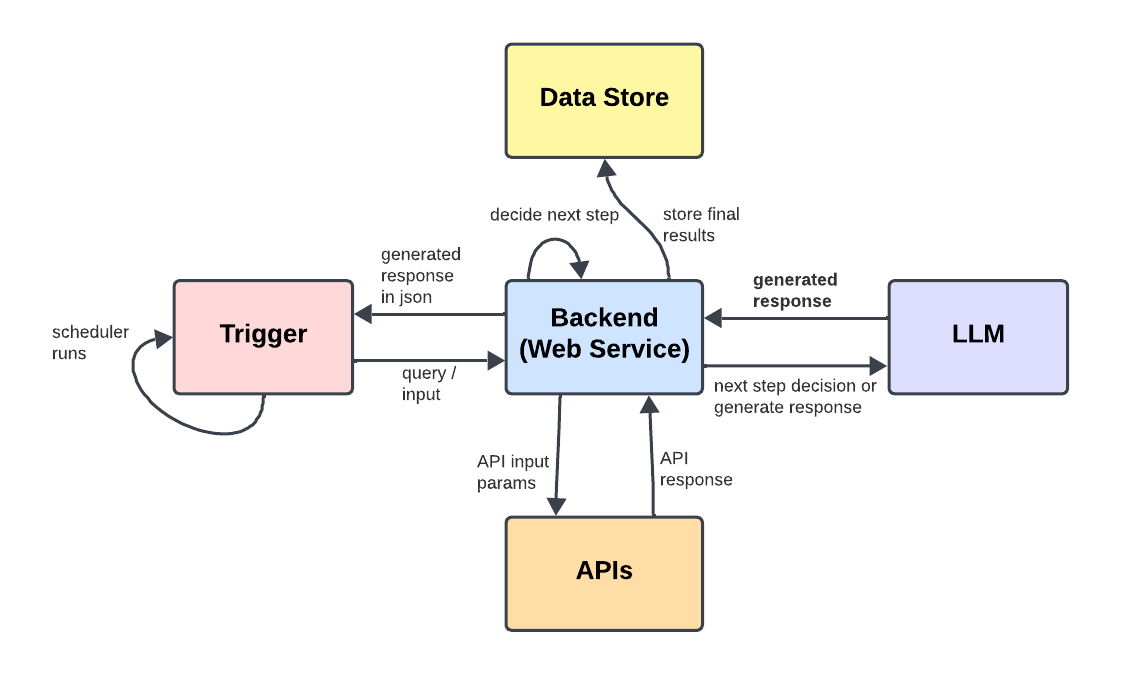

Headless Agents are our first pattern without a UI that a user actively interacts with. They are long-running backend processes started either by a backend trigger (e.g. daily/hourly schedule, new user signup, etc.) or by a frontend action, but with the expectation that the user isn’t waiting around to see the results.

These types of agents are useful for long-running workflows that do not require iterative human feedback or refinement.
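Here is a minimal sketch of what such an agent's core loop might look like. The names are all hypothetical (`Tool`, `MemoryEntry`, `runAgent`), and the `decide` function is a scripted stand-in for what would normally be an asynchronous LLM call that picks the next tool. A scheduler or event handler would invoke `runAgent`; that trigger is not shown.

```typescript
interface Tool {
  name: string;
  run: (input: string) => string;
}

interface MemoryEntry {
  tool: string;
  input: string;
  output: string;
}

type Decision =
  | { kind: "call"; tool: string; input: string }
  | { kind: "finish"; result: string };

// Stand-in for the LLM: given the goal and what has happened so far, pick the next step.
type Policy = (goal: string, memory: MemoryEntry[]) => Decision;

// Run until the policy finishes or the step budget is exhausted.
function runAgent(
  goal: string,
  tools: Tool[],
  decide: Policy,
  maxSteps: number
): { result: string | null; memory: MemoryEntry[] } {
  const memory: MemoryEntry[] = [];
  for (let step = 0; step < maxSteps; step++) {
    const decision = decide(goal, memory);
    if (decision.kind === "finish") {
      return { result: decision.result, memory };
    }
    const toolName = decision.tool;
    const tool = tools.filter((candidate) => candidate.name === toolName)[0];
    // Unknown tools are recorded as errors so the policy can react on the next step.
    const output = tool ? tool.run(decision.input) : `error: unknown tool ${toolName}`;
    memory.push({ tool: toolName, input: decision.input, output });
  }
  return { result: null, memory }; // ran out of steps without finishing
}

// Made-up tools for a daily report.
const reportTools: Tool[] = [
  { name: "fetchMessages", run: (day) => `3 new messages from ${day}` },
  { name: "summarize", run: (text) => `Summary: ${text}` },
];

// Scripted policy for the example: fetch, then summarize, then finish.
const scriptedPolicy: Policy = (_goal, memory) => {
  if (memory.length === 0) return { kind: "call", tool: "fetchMessages", input: "today" };
  if (memory.length === 1) return { kind: "call", tool: "summarize", input: memory[0].output };
  return { kind: "finish", result: memory[memory.length - 1].output };
};

const reportRun = runAgent("Write the daily report", reportTools, scriptedPolicy, 5);
console.log(reportRun.result);
```

The `maxSteps` budget is the main safety valve here: with no human in the loop, a hard limit on iterations is a simple way to stop an agent that never decides it is done.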

Product Examples

Daily Report Generation - gathering documents, posts, messages, etc. generated throughout the day, synthesizing them into a report, and sending it to various interested parties

Data Enrichment - on data ingestion, extracting key elements of the data and dynamically choosing which endpoints to call for further enrichment and/or storage of the data

Incident Response - agents that are triggered by incidents like operational software issues or security threats, query various data sources based on the incident information, take remediation steps, and alert relevant stakeholders depending on the outcome

Key Differentiators

Flexibility

UI: None - there is no UI!

Actions: High - agents are given autonomy to decide what actions to take next in order to achieve their goal. The flexibility is only limited by what tools they have available and can easily be made highly flexible by giving the agent access to a REPL, web browser, etc.

State: High - agents must be paired with some type of memory system and that memory is typically very flexible - running text memory, a local filesystem, a read/write API for memories, etc.

Control

UI: Fixed - there is no UI!

Actions: AI - once started, the headless Agent is the only thing in control of its actions. The only human control comes in the agent’s design before it’s started

State: AI - the agent decides which intermediate results to store on each consecutive run, in whatever storage it has access to

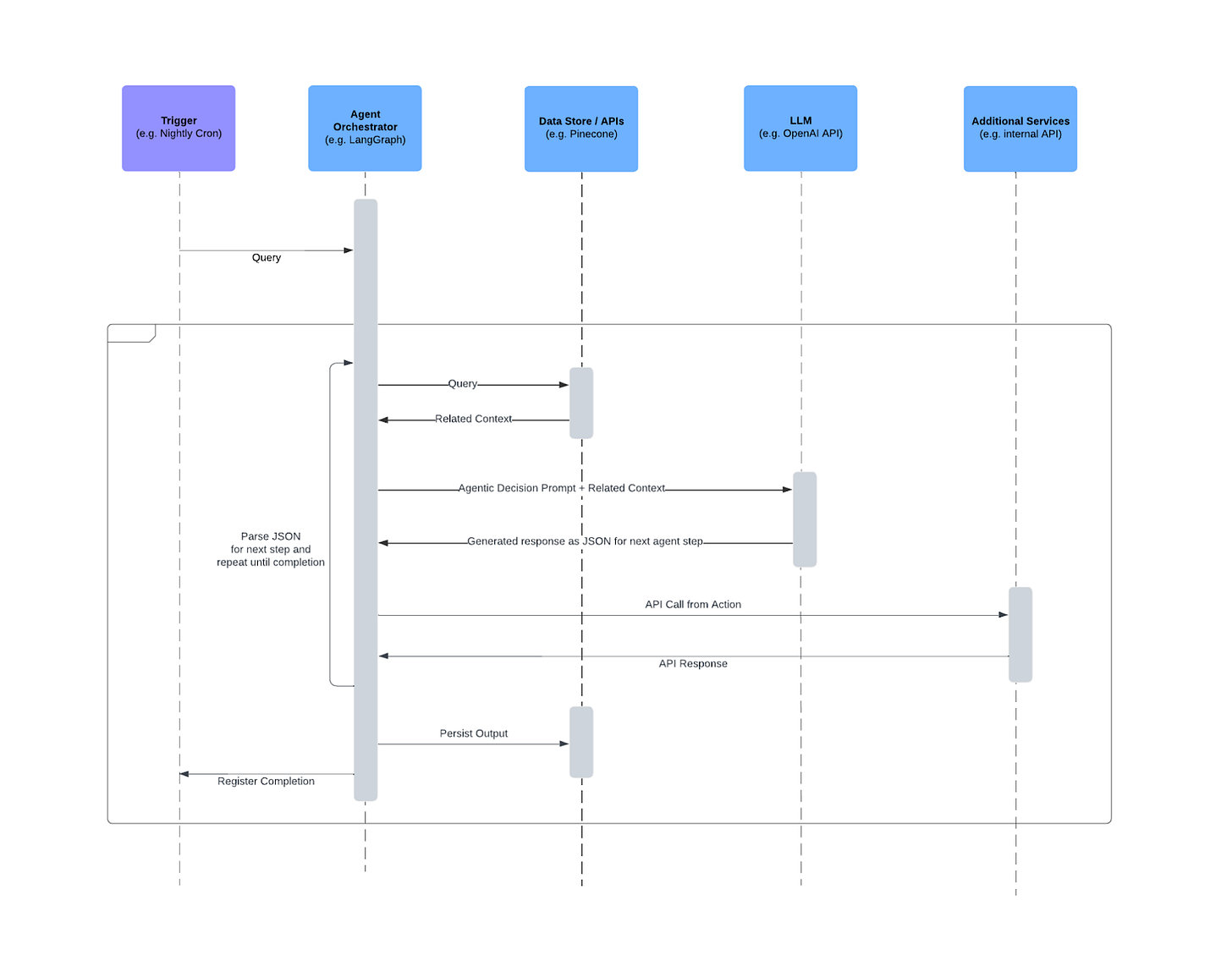

Architecture

Network Diagram

CoAgent

CoAgents are the newest emerging pattern with a lot of opportunity for powerful experiences. I was first introduced to this term in the great tooling work being done at CopilotKit. In the long run, what we’ll discuss as being “CoAgents” might get colloquially bundled underneath the “CoPilot” term, but I think at this stage of development they are worth keeping separate.

The CoAgents pattern distinguishes itself from other CoPilots by having a stateful, long-running agent in the backend that a user on the frontend needs to interact with on an ongoing basis. You can think of this as a merging of the CoPilot and Headless Agent patterns.

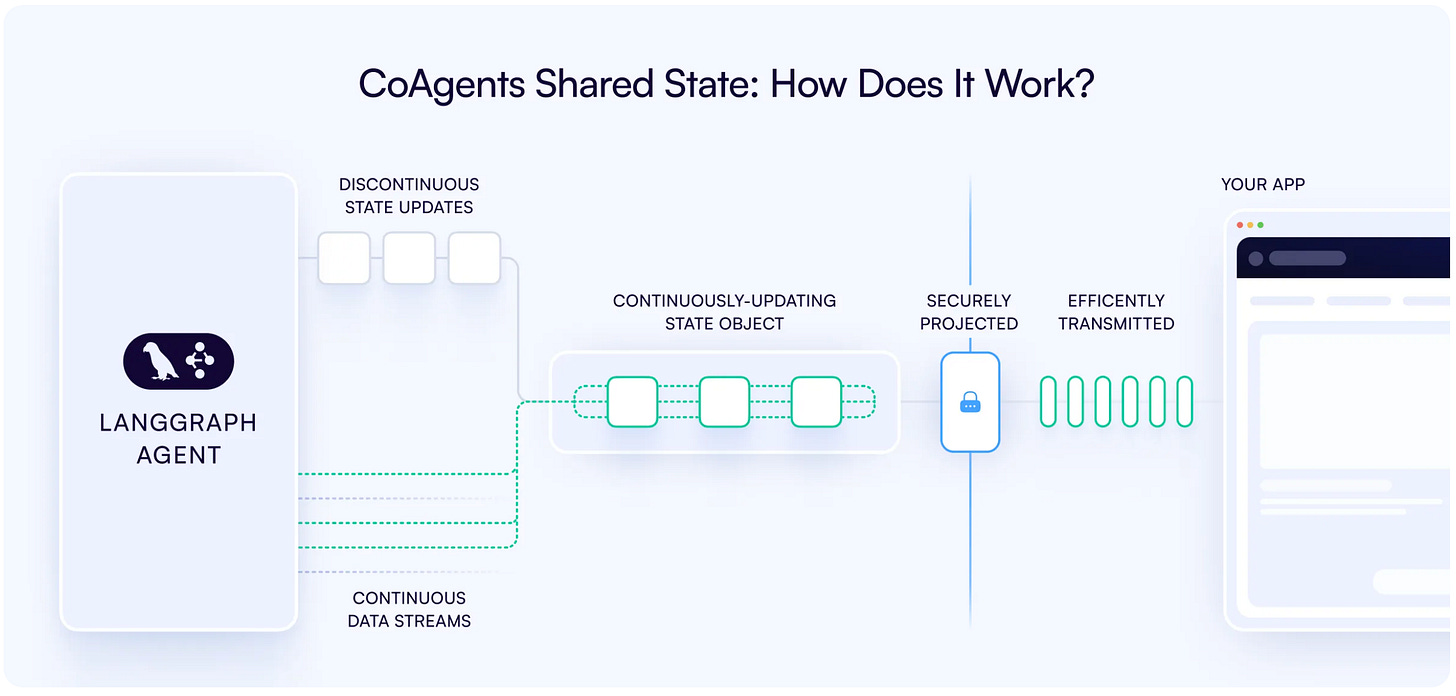

The key insight here is that the state of your application lives in two places - once in the frontend web application where your user has direct access, and once in the backend where the state is being used by the agentic graph. The interesting technical problems come in how you store, sync, update, and interrupt the state between the frontend and backend to make a product experience that is smooth, reliable, and fast.
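One possible way to keep two copies of state honest is version-checked patches, sketched below. This is my own illustration of a generic optimistic-concurrency approach, not a description of how CopilotKit or any other library syncs state. The `PlanState`, `Patch`, and `Replica` names are hypothetical, and the backend copy is assumed to be the authority that both the user and the agent send patches to.

```typescript
// Hypothetical shared state for a travel planner.
interface PlanState {
  destination: string;
  budgetUsd: number;
  notes: string;
}

interface Patch {
  author: "user" | "agent";
  baseVersion: number; // the version the author last saw
  changes: Partial<PlanState>;
}

interface Replica {
  version: number;
  state: PlanState;
}

// Apply a patch only if it was based on the replica's current version.
function applyPatch(replica: Replica, patch: Patch): { replica: Replica; accepted: boolean } {
  if (patch.baseVersion !== replica.version) {
    return { replica, accepted: false }; // stale: the author must re-read and retry
  }
  const next: Replica = {
    version: replica.version + 1,
    state: { ...replica.state, ...patch.changes },
  };
  return { replica: next, accepted: true };
}

// The backend copy starts at version 0.
const backendStart: Replica = {
  version: 0,
  state: { destination: "Lisbon", budgetUsd: 2000, notes: "" },
};

// The user edits first; the agent's patch was prepared against version 0 and is now stale.
const afterUser = applyPatch(backendStart, {
  author: "user",
  baseVersion: 0,
  changes: { budgetUsd: 2500 },
});
const staleAgent = applyPatch(afterUser.replica, {
  author: "agent",
  baseVersion: 0,
  changes: { notes: "Found three hotels" },
});
// After re-reading version 1, the agent retries and succeeds.
const retriedAgent = applyPatch(afterUser.replica, {
  author: "agent",
  baseVersion: afterUser.replica.version,
  changes: { notes: "Found three hotels" },
});

console.log(staleAgent.accepted, retriedAgent.accepted, retriedAgent.replica);
```

After each accepted patch, the new replica would be streamed to the frontend so its copy catches up. Rejecting stale writes is the simplest policy; it trades some wasted agent work for never silently overwriting what the user just did.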

On this topic you should really just go read the CopilotKit CoAgent docs - the work they’re doing is so so good.

Product Examples

Coding - Replit AI Agent, Cursor, Vercel v0

Research and Analysis - Hedgineer, Athena Intelligence

Travel Planners

Key Differentiators

Flexibility

UI: Medium - like CoPilots, CoAgents have some control over UI updates. This can easily be considered High if paired with Generative UI

Actions: High - running the agentic processing of your CoAgent in the backend gives you lots of flexibility in its action space - full code interpreters, internal + external APIs, etc.

State: High

Control

UI: Shared - as state is streamed back it updates the UI, but users can continue interacting with the UI like before as well

Actions: Shared - the agent autonomously executes actions in the backend that mirror the actions the user can continue to take on the frontend

State: Shared - back to the key insight, this state is updated and synced between the user and agent continuously. How to resolve conflicts if both are making updates is where it gets tricky
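As one illustration of a conflict policy, here is a sketch of a field-level three-way merge: compare the user's copy and the agent's copy against the last state they both agreed on, keep non-overlapping changes from each side, and let the human win when both changed the same field. The `mergeThreeWay` function and the "user wins" rule are assumptions for this example, not a recommendation from any particular framework. It also assumes all three objects share the same keys.

```typescript
type Fields = Record<string, string | number>;

interface MergeResult {
  merged: Fields;
  conflicts: string[]; // fields both sides changed to different values
}

// Merge the user's and agent's copies relative to their common base.
function mergeThreeWay(base: Fields, user: Fields, agent: Fields): MergeResult {
  const merged: Fields = {};
  const conflicts: string[] = [];
  for (const key of Object.keys(base)) {
    const userChanged = user[key] !== base[key];
    const agentChanged = agent[key] !== base[key];
    if (userChanged && agentChanged && user[key] !== agent[key]) {
      conflicts.push(key);
      merged[key] = user[key]; // policy choice in this sketch: the human wins
    } else if (userChanged) {
      merged[key] = user[key];
    } else {
      merged[key] = agent[key]; // equals the base value when the agent made no change
    }
  }
  return { merged, conflicts };
}

// Made-up example: both sides edit the budget, only the agent edits the destination.
const basePlan: Fields = { destination: "Lisbon", budgetUsd: 2000, notes: "" };
const userPlan: Fields = { destination: "Lisbon", budgetUsd: 2500, notes: "window seat" };
const agentPlan: Fields = { destination: "Porto", budgetUsd: 1800, notes: "" };

const mergeOutcome = mergeThreeWay(basePlan, userPlan, agentPlan);
console.log(mergeOutcome.merged, mergeOutcome.conflicts);
```

Returning the list of conflicting fields, rather than resolving them silently, leaves room for the UI to show the user what the agent wanted to change and let them decide.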

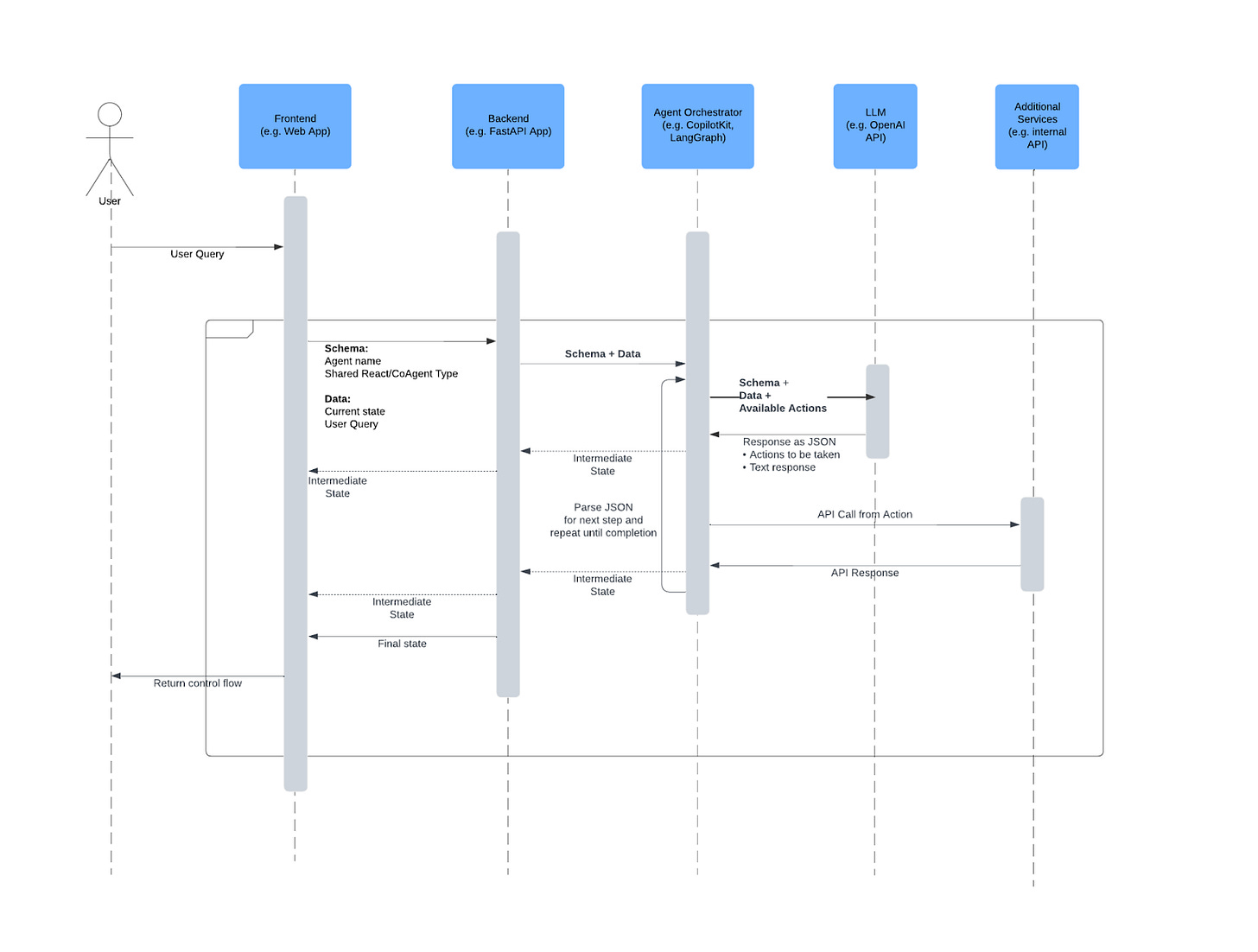

Architecture

Network Diagram

As AI continues to transform the landscape of knowledge work, understanding these emerging patterns - Chat, Generative UI, CoPilots, Headless Agents, and CoAgents - can serve as a roadmap for designing effective AI-first applications. Each pattern represents a balance of flexibility and control. Figuring out the right balance for your use case is where the interesting product development work begins.

These patterns are evolving quickly, but I hope this gave you some foundation to keep up with what will be a fun and fast-paced 2025.

That’s it for now! Time to get back to building.